Musicorum Galaxy

What are our musical galaxy's 7 biggest star systems?

And how robots may be changing music production and consumption

If you're a music geek, you can probably place your listening habits in one of the seven biggest star systems of our galaxy of music genres.

Of course, you don't have to listen to only one broad genre of music or another. In fact, that would hardly be possible: music genres themselves rarely draw inspiration from just one or two other genres.

A network analysis of most music genre entries that you can find on Wikipedia reveals how much any single one interacts with many others.

After a gruelling and lengthy data scraping and cleaning operation, one can conclude from the visualisation below that it would be very hard to untangle every music genre's role and contribution in the evolution of music.

You can find below an interactive data visualisation that is, at this point, probably more good-looking and eye-catching than informative (play with it with your mouse and move it around so you can explore this galaxy-like network analysis).

Dots are music genres, while links, or edges, lead to a genre's stylistic origins, derivative forms, subgenres or fusion genres. Hover over any dot to see which music genre it represents (this works much better on a laptop or desktop).

Our musical galaxy, based on Wikipedia data. Rather a piece of data art than an informative and insightful look into the evolution of music though. You can drag by holding your mouse's right button, rotate the whole thing with the left one, zoom in and out with scrolling, and hover on the dots to see which music genre a given dot is.

In short, this illustrates the history and evolution of music, but in an organic fashion rather than a timeline-based one.

An important note on the data though. The data comes from English-language Wikipedia entries (full documentation here), so this collection of music genres may, of course, underrepresent genres that are less well known in the English-speaking world, or be Anglocentric.

In other words, this is, of course, an incomplete picture of all the music out there. It also relies on music genres whose Wikipedia article had an infobox in the upper right corner, or that were mentioned in another genre's infobox.
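For the curious, here is a minimal sketch of how such a network could be assembled once the infoboxes have been scraped. The records, the field names and the `build_graph` helper are all made up for illustration; the actual scraper and its schema are not shown in this article.

```python
from collections import defaultdict

# Hypothetical, hand-written records standing in for scraped infoboxes.
# The field names mirror the relation types described above; the links
# themselves are illustrative, not taken from the real dataset.
INFOBOXES = {
    "Blues rock": {
        "stylistic_origins": ["Blues", "Rock and roll"],
        "derivative_forms": ["Hard rock"],
    },
    "Hard rock": {
        "stylistic_origins": ["Blues rock"],
        "subgenres": ["Glam metal"],
    },
}

RELATION_FIELDS = (
    "stylistic_origins",
    "derivative_forms",
    "subgenres",
    "fusion_genres",
)


def build_graph(infoboxes):
    """Return an undirected adjacency map: genre -> set of linked genres."""
    graph = defaultdict(set)
    for genre, fields in infoboxes.items():
        graph[genre]  # register the genre even if it has no links
        for field in RELATION_FIELDS:
            for other in fields.get(field, []):
                # A genre only mentioned in someone else's infobox
                # still becomes a dot of its own.
                graph[genre].add(other)
                graph[other].add(genre)
    return dict(graph)


graph = build_graph(INFOBOXES)
# Each edge is stored twice (once per endpoint), hence the division.
edge_count = sum(len(neighbours) for neighbours in graph.values()) // 2
```

Storing neighbours in sets means that a link declared from both sides (Blues rock lists Hard rock as a derivative, Hard rock lists Blues rock as an origin) counts as a single edge.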

But when I use another clustering algorithm, and hence a different visualisation, the colours tell a whole new story. Most genres you can think of will probably fit into one of the seven clusters below:

Just play with this visualisation below now, and see all the genres and subgenres that derive from their main dot by clicking on them.

Same data, a different clustering / community-finding algorithm (Louvain), but much clearer insights.
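A word on what Louvain is after. The method greedily groups nodes so as to maximise a score called modularity, which is high when links fall mostly inside communities rather than between them. The sketch below only computes that score for a given grouping; it is not the Louvain algorithm itself, and the tiny graph, its links and the `modularity` helper are invented for illustration.

```python
def modularity(edges, communities):
    """Modularity Q of a partition of an undirected, unweighted graph.

    Q = sum over communities of (L_c / m) - (d_c / (2m))^2, where m is the
    number of edges, L_c the edges inside community c and d_c the sum of
    the degrees of its nodes.
    """
    m = len(edges)
    community_of = {
        node: index
        for index, group in enumerate(communities)
        for node in group
    }
    internal = [0] * len(communities)  # edges with both ends in the community
    degree = [0] * len(communities)    # summed node degrees per community
    for a, b in edges:
        ca, cb = community_of[a], community_of[b]
        degree[ca] += 1
        degree[cb] += 1
        if ca == cb:
            internal[ca] += 1
    return sum(l / m - (d / (2 * m)) ** 2 for l, d in zip(internal, degree))


# Toy graph: two tight triangles joined by a single bridge (invented links).
EDGES = [
    ("Rock", "Punk"), ("Punk", "Metal"), ("Rock", "Metal"),
    ("Hip hop", "Trap"), ("Trap", "Grime"), ("Hip hop", "Grime"),
    ("Metal", "Hip hop"),
]

q_split = modularity(EDGES, [{"Rock", "Punk", "Metal"}, {"Hip hop", "Trap", "Grime"}])
q_lumped = modularity(EDGES, [{"Rock", "Punk", "Metal", "Hip hop", "Trap", "Grime"}])
```

Splitting the toy graph into its two triangles scores higher than lumping everything together, which is the kind of grouping a modularity-based method would favour.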

It is interesting to see that...

Which musical star systems can you see in our Musicorum Galaxy?

...

...

...

Well, it depends where you look: the galaxy is vast

🤷🔭🌌

Of course, there are other clusters or genre communities as well, or "star systems", to extend the galaxy analogy. In fact, there are 27 of them, many of which are hard to spot above because they carry less weight in the English-language Wikipedia linked data.

Three of them are small but have many associated genres: Samba, Dangdut and Calypso. They are easier to spot in the waffle chart below, in which one square simply equals one music genre.

After the Musicorum Galaxy... the Musicorum Waffle

(See a more zoomable version here, especially for mobile devices, and the list of all clusters of music genres here)

One can also notice the absence of classical music, which can hardly be hiding inside one of the broad genres above. This is because classical music genre entries on Wikipedia do not present their information in the same way, so the web scraper did not grab much from the music of Mozart, Brahms or Shostakovich.

In any case, it all depends on the data source and the data itself. In 2013, Glenn McDonald, a data alchemist 🧙📊 and music taxonomist at Spotify, carried out an analysis of the 'centrality' of genres using data from The Echo Nest, where he was employed at the time.

He has been looking at relationships and influences between genres and visualised all of them on his site EveryNoise.

But in an email conversation, McDonald clarifies how any "centrality" exercise brings little insight nowadays:

"As we expand into more countries farther from the US, our view of the world expands, and the notion of a centre in anything but an arbitrary mathematical sense becomes increasingly irrelevant. In some places, the centre is Cumbia. In some it's Schlager", McDonald says.

An organic rather than hierarchical picture of the sounds we enjoy

Arguably, this isn't the first attempt to draw some genealogical tree or timeline of the evolution of music genres.

But many similar exercises seem to rely on the manual and editorial labour of one or a few individuals, while the visualisation above comes from the crowdsourced data that is Wikipedia.

Also, because the world is so vast and interconnected now, ordered hierarchies make less and less sense and are increasingly an arbitrary exercise.

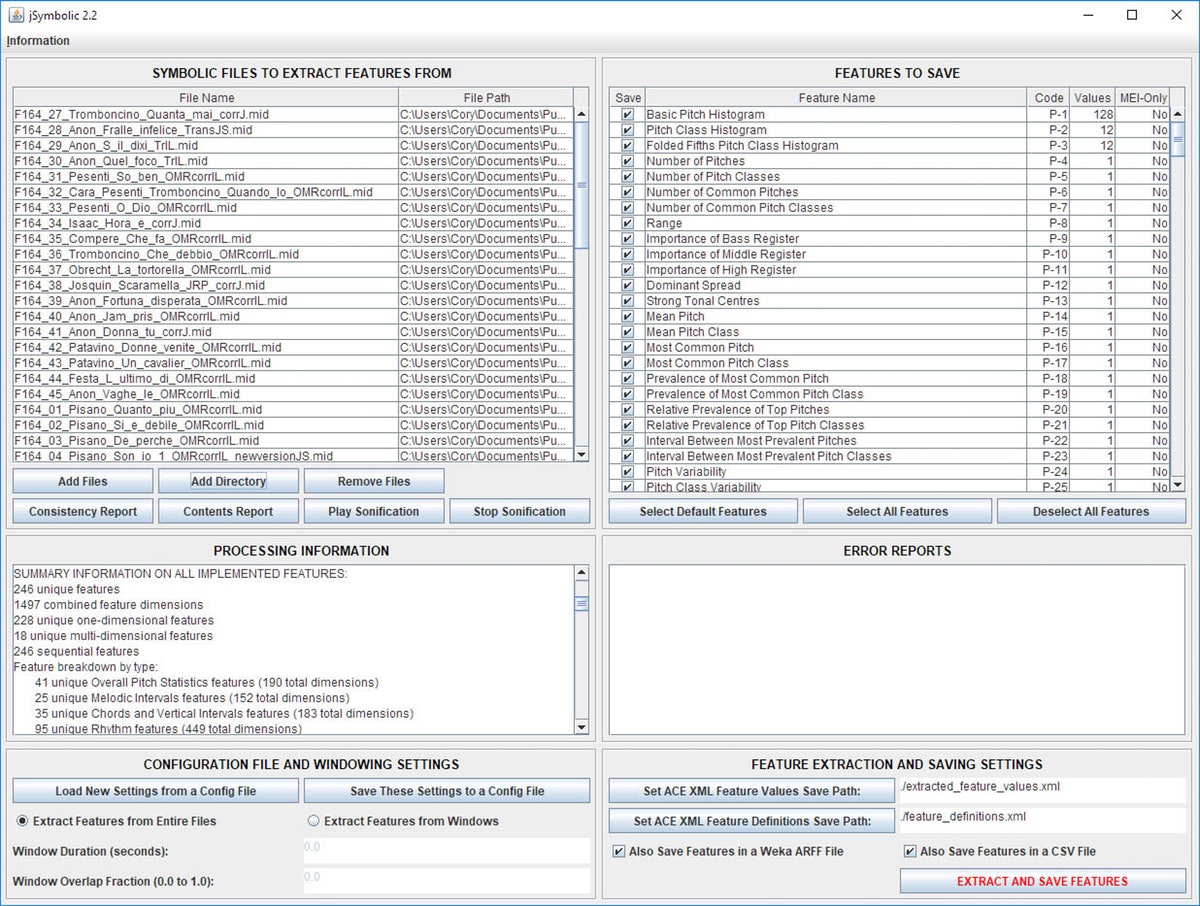

"Definitely, I think [hierarchical] trees are what people have traditionally done when they're trying to represent [the evolution of music] because it's a convenient approach", says Cory McKay, a professor at Marianopolis College in Montreal, a member of the Centre for Interdisciplinary Research in Music Media and Technology at McGill University and a private research consultant specialising in music and machine learning.

"I found in my own work that this can be suitable on a very zoomed out scale, but if you really want to have anything that [is better at ultimately representing the severalties] of what's going on between genres, you really need something that's not hierarchical, because you have all kinds of feedback loops, you have genres that still exist, and you have entirely separate genres that influenced that genre, and then there are separate other influences [...]", he adds.

But thanks to machine learning, we can now do what neither a handful of conventional observers nor an online crowd of enthusiasts can address: finding unexpected pattern similarities between genres.

Regardless of their century of birth.

Machine learning, statistics and centuries-old music genres: the Villotta has long deserved some credit for Madrigal music

Dr McKay and his colleagues at McGill are in the process of creating the Google Books of music scores.

In short, the SIMSSA project aims to make old music from the 15th to the 17th centuries available and searchable as in a database to see and discover patterns.

While developing the infrastructure, they have also been able to do some music research with it, some of which has revealed unexpected patterns in a music genre that emerged in Italy in the early 16th century.

"We were looking at the different genres and styles that led to the Madrigal, and we found some interesting and somewhat unexpected links by doing machine learning on the actual musical data", explains McKay.

The research states that "[the] Villotta emerges as an important genre for the origins of the madrigal – even though it has almost never been considered in this role before."

So the Villotta deserves some credit for the existence of Madrigal music (an edge to the latter's dot in the visualisations above).

The project also made it possible for him and his colleagues to correct composer misattributions.

"Especially from that period, there's a lot of pieces of music for which we don't know who the composer is, so we try to look for patterns, again using machine learning, to see that a given piece was probably composed by this composer, or that some other piece is misattributed."

Robots that are into music classification, curation... and production?

There is another thing that a crowd cannot achieve: automating the classification of artists as rapidly as Spotify and other organisations do.

This is something that Glenn McDonald has been doing for at least 5 years now.

"I got into genre categorisation by accident when the Echo Nest needed to do a better job of recommending music for genre requests, and I figured out a way to do that by only manually identifying a few key artists for each genre and then using math to extrapolate others", he says.

"There were about 300 genres in that initial effort, and as you know, that turns out to have been a small beginning."

Indeed, Spotify now has close to 2,000 music genres in its database.

This probably makes Spotify's and The Echo Nest's database a more interesting resource for music genres, as it holds track-level metadata on things like rhythm, tempo, "danceability" and aggressiveness. It does not, however, hold historical information.

But Glenn insists the human and robot methodology is not perfect, and there is still some criticism:

"[Of] course, there are various forms of resistance [to it]. The automated processes are often wrong, slightly or baldly, and not everyone accepts error-rates with the same cheer. I have a very broad view of what can constitute a genre, and some people have stricter definitions."

"It's worth reiterating, though, that none of Spotify's genre efforts is unattended. All of them begin with humans deciding that a genre is a thing, choosing a name for it in our namespace, and picking some artists that define what we mean by it."

"But from my viewpoint, the eventual goal is to model every shared listening impulse in the planet, and thus help the fans of anything find more of it (and makers of it find more listeners), and maybe help curious listeners find things they don't yet know they would love."

Are algorithms playing a bigger role in the creation of music?

While music genres interact with each other through musicians' everyday work, there are also people who are experimenting with the mixing and blending of music genres by targeting their specific features.

Jason Hockman is an Associate Professor at the School of Computing and Digital Technology at Birmingham City University, as well as an electronic music producer. Like Cory McKay, he has a research interest in music information retrieval and the study of audio characteristics within songs.

He and his colleagues are currently carrying out research in the hope of developing a tool that would automate the process of mixing a song's genre-specific features with those of a song from a different genre.

Jason tells me the tool is at the experimental stage, and that other researchers elsewhere are attempting to develop something similar as well.

We also discussed hit song detection and production, something he isn't into himself but that an increasing number of organisations are attempting to do.

It's about feeding an algorithm with songs that have been hits and some that haven't, so it can predict whether a song will find a place in the charts and, ultimately, provide recipes for industrial music production.
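To make the idea concrete, here is a deliberately naive sketch of that kind of supervised classification: a nearest-centroid classifier that labels a new song according to whichever group of known songs it sits closest to. Everything in it is an assumption for illustration, including the two features, their invented values and the helper names; real hit-prediction systems are not described in this article and are certainly more elaborate.

```python
def centroid(points):
    """Average position of a list of equally sized feature tuples."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def train(examples):
    """examples: list of (features, label). Returns label -> centroid."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {label: centroid(points) for label, points in by_label.items()}


def predict(model, features):
    """Return the label whose centroid is closest to the given features."""
    return min(model, key=lambda label: squared_distance(model[label], features))


# Invented training data: (danceability, energy) on a 0-1 scale.
TRAINING = [
    ((0.8, 0.7), "hit"), ((0.9, 0.8), "hit"), ((0.7, 0.9), "hit"),
    ((0.3, 0.4), "miss"), ((0.2, 0.2), "miss"), ((0.4, 0.3), "miss"),
]

model = train(TRAINING)
first_guess = predict(model, (0.75, 0.6))
second_guess = predict(model, (0.35, 0.5))
```

With this made-up data, the first song lands nearer the "hit" group and the second nearer the "miss" group. Whether such features say anything about real chart success is exactly the open question raised below.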

While hit song prediction may be promising, musicians and producers shouldn't fear losing their jobs to robots in the foreseeable future, believes Dr McKay:

"Definitely there's been some efforts either in automatic songwriting or production, but how successful they've been, it's hard to say. Any company will always say their software is brilliant and it works really well, but [...] you don't know [what their criteria are] and what they are doing."

"In practice, I don't know how widely used they are. Humans are very, very much still involved in the production of music and decisions that are being made, although having said that, marketing research is certainly something that is involved in deciding how music is made and what's produced and [what] you will get."

But McKay points to one aspect of music consumption that is certainly becoming more and more automated, and whose impact on people's tastes is anyone's guess.

"Curation is something where many people are less involved, like for radio stations, instead of having one DJ or more in every station, deciding what they're going to play, you'll have centralised [decision-making higher in a given organisation owning many stations], and some of them are auto-generated as well, especially with Internet radio."

We then wondered whether automatic curation, be it from centralised radio stations or streaming services, would be detrimental to the discovery of new or innovative music.

He certainly thinks a reinforcement effect is to be expected, the level of which will depend on where you listen to your music.

"To a certain extent, you can set up systems so that they encourage the introduction of new things every so often so that you play mostly what's similar and then you choose something that that person seems to have never heard of that is a bit less known."

"But it creates sort of an interesting feedback loop, in the sense that if the system is either a record company or [some other interested party], and it's telling you that these are the things you want to listen to, and then those are the things you listen to, the reinforcement [is that someone will think] 'oh yes, these are the things that I want to listen to, because I don't have anything else to listen to'."

But not all of this is about programmers deciding what people will listen to. Recommendation systems also draw on the behaviour of listeners who actively browse for music, and whose choices inspire recommendations for those who browse less actively but share similar tastes.

"As long as there are some people who are going up there and making their own efforts to find new music and listen to new kinds of things, then the insights that they find or the music they discover can be spread to other people with similar tastes by the system."

Beyond that, he thinks it's hard to know whether the industry is unofficially limiting tastes in a way that's contrary to what people want, or whether it does so simply because that's what people want to listen to.

"People do seem to like music that is similar to what they already like. There's been a real convergence, people listen to much less variety now than they did 20 years ago, and that's variety in terms of different pieces, of the different artists and also regarding the genres", he says.

"[Labels then] can produce less music, they can focus on fewer artists which means the production process costs less, they don't have to spend lots of marketing on different people, so it's great for them. But if people really wanted a greater variety of music, then, they would demand a greater variety of music, but they seem to be satisfied and most people seem to be satisfied with that", he concludes.

How do music genres evolve now, in an interconnected world?

"The most reliable source of new genres, I think, remains cultural variation. Music from one place gets heard in other places, and then the people in those places start to make a version of it themselves, which is rarely the same as what they heard. So we get Reggaeton, or Alt-Idol, or Gqom, and so on", says McDonald.

"One thing that I think is interesting is that it's so easy now to sample sounds, with a phone you can sample music very easily. There's a culture of sampling. And of course, you can use the samples in your music. Then maybe people are paying attention to sounds in a different way."

"Because you can very easily just take your phone and say 'Oh that's an interesting sound, I'd like to use it in my music'. There's that possibility whereas it would have been much harder in the past to have that possibility in your pocket. Your phone records the sound with very good quality."

Smartphones are now increasingly used in some orchestras, too. Dr McKay is also the director of Marianopolis College's Laptop Orchestra, or MLOrk.

Laptop orchestras are part of a trend that started at Princeton University and expanded to other universities like Stanford, Concordia and Oslo (SLOrk, CLOrk and OLO). So naturally, mobile phones were the obvious next addition.

And there are many music genres that are the children of the Internet and online communities.

An obvious and funny example is Vaporwave, Jason Hockman taught me: the act of heavily slowing down and slightly altering any more or less musical sound or bunch of sounds, such as the Windows 95 start-up sound or your local shopping centre's background music.

Another example is VGM, or the art of remixing, rearranging or covering music from video games in an interpretative way. The genre wasn't born in any specific physical place: although it is very prominent in the US, it is tied to no particular city.

Going back to the automation of everything: with automatic genre mixing, hit detection, perhaps production, and curation all in mind, if I were an entrepreneur or a startup founder, I would probably try to automate DJing. The software would read the mood and feel of a crowd on the dance floor, then adjust or change the music or its features when people aren't dancing or don't seem happy enough. Or not. 🔚